In this document, one presents the manual of FeResPost.

When sizing a structure with FE software, the engineer is often lead to use or develop tools that automate the computation of margins of safety, reserve factors or other results from outputs obtained with a FE solver. Then the engineer is facing several problems:

He has to take into account the possibility of modifications of the structure and of its corresponding finite element model during the project.

Also, the allowables may change during the course of the project.

Sometimes, the calculation methods are imposed by a client or a methodology department in the company, and these methods may also be modified, or be defined concurrently with the sizing activities.

For long duration projects, the members of the calculation team are often displaced as the work proceeds, and time is lost to transfer the information from one engineer to another.

All these problems induce difficulties in the management of the automated post-processing. These must be as simple and cleanly written as possible to allow an easy understanding by everyone. Also their architecture must be flexible to make the modifications easy to implement and reduce the risk of errors.

The problems mentioned above are very similar to the kind of problems a programmer is facing when writing a large program. To help the programmer in this task, the object-orientation has emerged as a new programming language concept. Object-oriented languages allow the development of more flexible programs with a better architecture and allow the re-usability of code. Many object-oriented languages are now available. They can be compiled languages (C++, Fortran 90,...) or interpreted ones (Ruby, Python, Visual Basic,...).

FeResPost is a compiled library devoted to the programming of automated post-processing of finite element results. It provides the definition of several classes and one module. It uses object orientation at two levels:

With object-oriented languages, it allows to write interpreted and object-oriented automated post-processing. An example of such an object-oriented post-processing program is given in Chapter IV.4.

The FeResPost library is mainly written in C++, which is also an object-oriented language. Then a Ruby wrapping is provided around the C++ code.

During the development of FeResPost, the developer has been trying to maintain as much as possible the simplicity and clarity of the language in which post-processing programs are written.

FeResPost can be accessed from different languages and on different platforms:

As a ruby extension. Binaries are available for Windows and Linux distributions of ruby.

As a Python copiled library on Windows and Linux.

As a COM component on Windows. Then, the library can be used with different languages that support COM interface: C, C++, ruby, Python, VBA...

As a .NET assembly that allows the programming with VB.NET, C++.NET, C#...

One gives here some advice to people starting to use FeResPost. The order in which the knowledge is acquired matters. One of the worst way to try to learn FeResPost is to read the manual while writing bits of code meant to solve the particular problem the user has in mind. Instead, one suggests the following sequence of knowledge acquisition:

FeResPost is an extension of ruby programming language. This means that the examples provided in the document are small ruby programs. Therefore a basic knowledge of ruby is necessary. People trying to learn ruby and FeResPost at the same time will probably fail in both tasks.

Very good books on ruby language are available in libraries. Internet resources are also a source of information (newsgroups, tutorials with examples...).

Note that people already familiar with one or several object-oriented programming languages will have no difficulty to acquire a basic knowledge of ruby.

Then, the user may test FeResPost by running the small examples. These are provided in the sub-directories in “RUBY” directory. Note that the Nastran bdf files should first be run in order to have the op2 result files available. It may be a good idea to try first to understand the structure of the bdf files and of the organization of the finite element model.

The small examples are meant to be increasingly difficult. So, the user should first run the examples in “EX01” directory, then in “EX02”... For each example, every statement should be understood, and the corresponding pages of the user manual should be carefully read.

When all the small examples have been run and understood, the user will probably have acquired a reasonable understanding of the various capabilities of FeResPost. Then it may be a good idea to start to read the user manual. For example, to read a few pages each day in such a way that the information can be digested efficiently.

The two examples “PROJECTa” and “PROJECTb” illustrate the programming of more complex post-processing of Results involving loops on load-cases, on several types of post-processing calculations... These two projects should be studied at the very end.

“PROJECTa” is meant to be studied before “PROJECTb”. Indeed, “PROJECTa” is easier to understand than “PROJECTb”, because it is less object-oriented. but it is also less complete and less nice from a programming point of view.

The reason why the advices above is given is that many users send mails of questions or complaints because they fail to understand something about FeResPost which is clearly illustrated in the examples. Sometimes, the problems faced by the users are simply related to a lack of understanding of the ruby programming language.

This document is organized as follows:

In Part I one presents the various classes defined in FeResPost library, and their member functions. One emphasizes the definition of the concepts to which they correspond, and their relevance for the developments of post-processing tools.

Part II is devoted to the presentation of the classes devoted to composite calculations with the Classical Laminate Analysis Theory.

Part III is devoted to the presentations of the preferences for the different solvers supported by FeResPost.

In Part IV, several examples of post-processing written with the ruby library are presented.

Part VI contains the description of FeResPost COM component. This Part is less detailed as most methods in COM component have the same characteristics as the corresponding methods in ruby extension.

Examples of programs with the COM component are given in Part VII.

Part VIII contains the description of FeResPost NET assembly. Here again, the description is shortet than for the ruby extension.

Examples of programs with the NET assembly are given in Part IX.

Part V contains the description of FeResPost Python library. Both the library and the examples are described in that part which is vry short as the Python and ruby languages are very similar.

Part X contains the annexes.

References are given in Part XI.

A list of the different classes defined in FeResPost with pointers to Tables listing the methods defined by these classes is given in Table 1.

| Class | Chapter | Table | Page |

| “Common” Classes | |||

| DataBase | I.1 | I.1.1 | 52 |

| CoordSys | I.2 | I.2.1 | 79 |

| Group | I.3 | I.3.1 | 91 |

| Result | I.4 | I.4.1 | 100 |

| ResKeyList | I.5 | I.5.1 | 179 |

| Post (module) | I.6 | I.6.1 | 186 |

| Classical Laminate Analysis

| |||

| ClaDb | II.2 | II.2.1 | 459 |

| ClaMat | II.3 | II.3.1 | 468 |

| ClaLam | II.4 | II.4.1 | 478 |

| ClaLoad | II.5 | II.5.1 | 513 |

| Solver Preferences

| |||

| NastranDb | III.1 | III.1.1 | 534 |

| SamcefDb | III.2 | III.2.1 | 670 |

FeResPost is still at the very beginning of its development and much work is still necessary to cover a wider range of applications. One gives below a few examples of possible improvements, more or less sorted by order of emergency or facility:

Correction of bugs...

Addition of specialized post-processing modules programmed at C++ level to provide efficiency. For examples:

A module devoted to fatigue and damage tolerance analysis.

A module devoted to the calculation of stresses in bar cross-sections from the bar forces and moments.

...

Extension of FeResPost by providing interfaces towards other FE software like Abaqus,...

...

Of course, we are open to constructive remarks and comments about the ruby library in order to improve it.

.NET515–527, 531–535

Abaqus6

abbreviations47–48, 314

Acceleration of computation118–119, 361–362, 575–585, 608

asef317

Autodesk266, 697

Bacon313, 314, 495

bacon314

banque313–314

BBBTsee Binary Blocked Balanced Tree, see Binary Blocked Balanced Tree

big endian275–277

Binary Blocked Balanced Tree270

BLOB65, 101, 115, 381–383, 436, 473, 492, 497–498, 500–501, 535

bolt group621

bolt group(617

bridge609–615

C467

C++62, 67, 70, 465–466

CLA449–450, 521–522

ClaDb125, 203–208, 449, 521

ClaLam125, 217–235, 450, 522

ClaLoad125, 237–243, 450, 522

ClaMat125, 209–215, 449–450, 521

Classical Laminate Analysis449–450, 521–522

code327–330

COM441–455, 459–512, 609

Combined strain criterion182

Complex, Complex98–100

Component Object Model441–455, 459–512

Composite48–49, 125–243

CoordSys35, 53–59, 263–264, 314–315, 366

COPYING623–637

CRMS296

DataBase35, 37–52, 92–94

DataBase, flags, readDesFac318

DataBase, flags, readDesFac318

DataBase, key43–44

des316–324

disable269, 276, 318

dynam317

ECMAScript268

EDS266, 268

enable269, 276, 318

endiannes275–277

EQEXINE286

EQEXING286

Equivalent Strain134, 166, 181, 182

ESAComp125, 207

excel413–415, 423, 425–426, 443, 472–473, 475–489, 491–512, 609–615

exception119, 433, 447

fac316–324

FEM265

FeResPost35

Fick199

FieldCS73, 75, 93, 366

finite elements35

Fourrier195

General Public License623–637

Gmsh50–52, 381, 492–495, 497–498, 500–501, 577

GNU543

GPL623–637

Group35, 37, 41–43, 61–66, 80, 109, 264–265, 315–316, 354–357

Hash Key271

Hashin criteria189–191

HD5119, 389–390, 395–396, 435–436

HDF119, 257–259, 288–293, 389–390, 395–396, 435–436, 542

HKsee Hash Key, see Hash Key

Hoffman criterion186

Honeycomb criterion193–194

I-DEAS266, 268

identifier125

IDispatch444, 466

Ilss criterion194–195

Ilss_b criterion194–195

include256

instance method436, 605

Inventor Nastran266, 697

iterator49–50, 65, 101–102, 107–108, 293–294, 325

iterators357–358

keysee DataBase, key, see Result, key

lambda436

layersee Result, layer

Lesser General Public License623–637

LGPL623–637

little endian275–277

Makefile542

Maximum strain criterion181–182

Maximum stress criterion180

mecano317

Mechanical Strain134, 166, 181, 182

Microsoft444, 466

Microsoft Office414, 425–426

MINGW543

Mohr83, 84

MSYS543

Nastran64, 198, 249–309, 335

NET515–527, 531–535

NX314

op2257–259, 265–269, 295–309, 392–393

Patran35, 41, 42, 61, 63, 97, 307, 344

Post35, 81, 89, 109–119

post-processing35

Predefined criterion118–119, 361–362, 575–585, 608

proc436, 604

PSD115–118, 296, 371–372

Puck criterion187

Puck_b criterion187–188

Puck_c criterion188

Python431–437

python461–463

random115–118, 270

random access277–288, 319–324

Regular expression267–269

Reserve Factor176

ResKeyList35, 71, 76, 78, 79, 105–108

Result35, 37, 38, 43–47, 67–103, 105, 109, 112, 119, 270–293, 295–309, 319–324, 358–372

Result, Complex98–100

Result, key70–71, 76, 79, 94, 100–102, 105, 108, 360

Result, layer70–71

Result, value44, 70, 73–74, 79, 100–102

Results563–571

results35

rfalter256

rfinclude256

RMSINT117

ruby35, 67, 464

Samcef47, 311–331, 483, 489, 492, 494–496

scalar73, 78, 79, 81–84, 87, 92, 94, 96, 97, 100

Siemens314

SQL65, 101, 115, 381–383, 436, 473, 492, 497–498, 500–501, 535

SQLite381–383, 436, 473, 492, 497–498, 500–501, 535

stabi317

Strength Ratio176

superelement257–259, 390–396

tensorial73, 83–85, 87, 92, 94, 100

Tresca criterion178–179

Tsai-Hill criterion183–184

Tsai-Wu criterion184–186

tuple432, 436

Unigraphics266, 268

valuesee Result, value

VBA413, 443, 472–473, 475–489, 491–512, 609–615

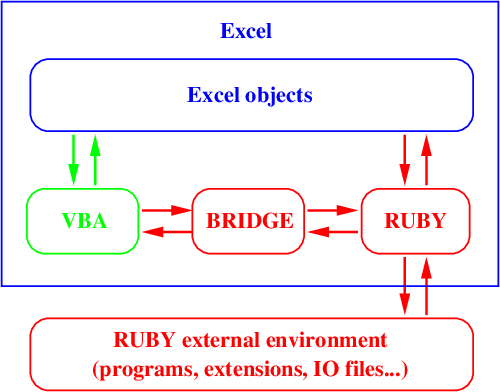

VBA-Ruby bridge609–615

VBscript464

vectorial73, 82, 83, 87, 92, 94, 100

Von Mises83, 84

Von Mises criterion179

word414, 425–426

xdb257–259, 270–288, 295–309, 393–395, 435–436

Yamada-Sun criterion192

FeResPost is a library that allows the manipulation of finite element entities and results. Its purpose is to ease the development of post-processing programs. The supported solvers and corresponding program preferences are discussed in Part III of the document.

The various capabilities implemented in the ruby extension are mainly inspired by Patran capabilities. Several types of objects can be manipulated:

The “DataBase” class corresponds to the Patran concept of dataBase. It is used to store the finite element model definition, the results, the groups,... It also allows to perform operations on the corresponding objects. This class is presented in Chapter I.1.

The “CoordSys” class allows the definition and manipulation of coordinate systems. This class is very practical for some manipulation of Results. It is presented in Chapter I.2.

The “Group” class corresponds to the Patran “group”. This class is presented in Chapter I.3.

The “Result” class is used to retrieve, store, transform finite element results. This class is presented in Chapter I.4.

The “ResKeyList” class is very useful to define lists of entities on which results are to be retrieved. This class is presented in Chapter I.5. Actually, this class is still under construction. However, the ResKeyList objects are already used for the manipulation of Results (section I.4.3).

Finally, additional functions, not member of any class are defined in a module called “Post”. “Post” module is discussed in chapter I.6. In the same chapter one also discusses other topics, as exceptions.

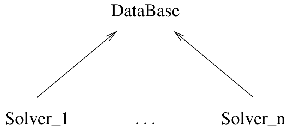

Basically, a “DataBase” class is a container used to store a finite element model, Groups, Results, and other less important entities. The DataBase class also allow to retrieve, manipulate or modify these objects. The DataBase class is a generic class that cannot be instantiated. The specialized classes that inherit the generic DataBase class are described in Part III. Other solvers might be supported in the future. The class hierarchy is schematically represented in Figure I.1.1.

As three classes are represented in Figure I.1.1, the methods described in this Chapter may belong to the generic DataBase class or to the derived classes. A few basic principles should help the user to “guess” in which class some of the methods are defined:

All methods related to the definition of the model stored in the DataBase are defined in the specialized classes.

All methods related to the reading of Results from solvers output files are defined in the specialized classes.

Most methods for the manipulation of Groups and of Results are defined in the generic “DataBase” class.

Throughout the Chapter one specifies for the different methods, in which class they are defined. This Chapter is divided in several sections:

One presents in section I.1.1 the methods devoted to the initialization of the finite element model in the DataBase.

Section I.1.2 is devoted to the DataBase’s methods devoted to the manipulation of Groups.

Section I.1.3 is devoted to the DataBase’s methods devoted to the manipulation of Results.

In section I.1.4, the manipulation of abbreviations stored in the DataBase is described.

The interaction of CLA classes with DataBase classes is discussed in section I.1.5.

The iterators of Class DataBase are described in section I.1.6.

Finally, general purpose methods are presented in section I.1.7.

A list of the methods defined in “DataBase” class is given in Table I.1.1.

No generic “DataBase” object can be created because “DataBase” class cannot be instantiated. This means that a statement like:

db=DataBase.new()

leads to an error message. All the methods described in this section are defined in the specialized versions of the DataBase class. So no “new”, “initialize” or “clone” method is defined in DataBase class.

One defines three methods that allow to retrieve the number of entities of certain types stored in DataBase FE model:

“NbrCoordSys” attribute returns the number of coordinate systems stored in the DataBase.

“NbrElements” attribute returns the number of elements stored in the DataBase.

“NbrNodes” attribute returns the number of nodes stored in the DataBase.

Each of these methods has no argument and returns an integer. Other methods allow to check the existence of finite element entities:

“checkCoordSysExists” returns true if the specified coordinate system exists, false otherwise.

“checkElementExists” returns true if the specified element exists, false otherwise.

“checkNodeExists” returns true if the specified node exists, false otherwise.

“checkRbeExists” returns true if the specified RBE exists, false otherwise.

Each of these four methods has one integer argument corresponding to the entity the existence of which is checked.

Several methods allow to retrieve elements information. Each of the following methods has one integer argument corresponding to the element ID:

“getElementType” returns an integer corresponding to the type ID of the element.

“getElementTypeName” returns a string corresponding to the type name of the element.

“getElementDim” returns an integer corresponding to the topological dimension of the element.

“getElementNbrNodes” returns an integer corresponding to the number of nodes defining the element.

“getElementNbrCornerNodes” returns an integer corresponding to the number of corner nodes defining the element.

“getElementNodes” returns an array of integers that corresponds to the element nodes.

“getElementCornerNodes” returns an array of integers that corresponds to the element corner nodes.

Normally one class corresponds to each solver supported by FeResPost. The preferences for the different supported solvers are described in Part III.

Groups can be stored in, and retrieved from a DataBase object. One presents here the methods defined in generic class DataBase, or in its derived classes, and that are devoted to the manipulation of Group objects. In the DataBase, the Group objects are stored in a mapping associating their names to the Group. This association allows to retrieve the Group when needed.

One makes the distinction between the simple manipulation of Groups described in section I.1.2.1 and the more complicated operation where new Groups are created by association operations (section I.1.2.2). The methods related to these associative operations are often defined in the specialized versions of the Database class.

One describes here methods that allow the manipulation of Groups stored in a generic DataBase object. All the methods described in this section are defined in generic DataBase class. These methods are described below:

“readGroupsFromPatranSession” reads one or several Groups from a Patran session file obtained using the utility “Patran–>utilities–>groups–>exportToSessionFile”. The argument is a String corresponding to the name of the session file. The method returns nil. If some of the entities read in the session file do not exist (for example, missing elements or nodes), then the read entities will not be added to the Group. This corresponds to the behavior of Groups in Patran. Therefore the session file containing the definition of Groups should be read after the finite element model.

Note that, even though the method is related to an MSC software, it can be used with DataBase related to different solvers. This is the reason why the method “readGroupsFromPatranSession” is defined in generic DataBase class.

The method “readGroupsFromPatranSession” works as follows:

It searches in the file the statements “ga_group_entity_add” and stores the while PCL statement in a string.

Then, the name of the Group being initialized is scanned from the string.

Finally, the entity definition is scanned:

The statement searches words like “Node”, “Element”, “MPC”, “CoordSys”. Note that “MPC” corresponds to the definition of a list of rigid body elements (RBEs). Nastran MPC cards cannot be inserted in a Group.

For any of the four first words, the method builds a list of integers corresponding to the identifiers of the entities to be added to the Group. For the three last words above, the method skips the entities.

The entities can be defined by a list of integers separated by blanks, or by pairs of integers separated by “:” which defines a range of integers, or by groups of three integers separated by two “:” which defines a range with a stepping argument.

Prior to storing the Group in DataBase, the method checks that the entities of the Group are defined in the DataBase. If not, the are erased from the Group.

Note that the definition of Groups in Ruby by range specification uses the same kind of formats as ”setEntities” method in Group class. (See section I.3.3.)

“writeGroupsToPatranSession” writes the Groups stored in the DataBase in a text file corresponding to a Patran session defining groups. This method is the reverse of method “readGroupsFromPatranSession” an has one argument: the name of the Patran session file. Note that “MPC” corresponds to the definition of a list of rigid body elements (RBEs). Nastran MPC cards cannot be inserted in a Group.

“addGroupCopy” is used to add a Group to a DataBase. The method returns nil and has one argument: the Group object. Note that, when adding a Group to the DataBase, a check is done to verify that all its entities are present in the DataBase. If not present, then the corresponding entities are erased from the Group. As this involves the modification of the Group definition, all operations are performed on a copy of the Group argument. In the DataBase, the added Group is associated to the name of the group argument. If a Group associated to the same name existed prior to adding the new Group, the method replaces the former Group by the new one.

“NbrGroups” attribute has no argument and returns the number of Groups stored in the DataBase.

“getAllGroupNames” has no argument and returns an Array of String objects corresponding to the names of all the Groups contained in the DataBase on which the method is called.

“checkGroupExists” allows to check whether a Group exists in the DataBase. The argument is a String object corresponding to the name of the Group. The returned value is true if the Group has been found, false otherwise.

“getGroupCopy” has one argument: a String object corresponding to the name of the Group one tries to retrieve. The method returns a copy of the Group stored in the DataBase, if the Group with the appropriate name exists. The method returns an error is no Group with the appropriate name exists in the DataBase.

“eraseGroup” erases a Group from the DataBase. The argument is a String object corresponding to the name of the Group to be erased. If the Group does not exist in the DataBase, nothing is done.

“eraseAllGroups” erases all Groups stored in the DataBase. This method has no argument.

“getGroupAllElements” returns a Group containing all the elements that define the model stored in the DataBase.

“getGroupAllNodes” returns a Group containing all the nodes that define the model stored in the DataBase.

“getGroupAllRbes” returns a Group containing all the RBEs that define the model stored in the DataBase.

“getGroupAllCoordSys” returns a Group containing all the coordinate systems that define the model stored in the DataBase.

“getGroupAllFEM” returns a Group containing all the elements, nodes, rbes and coordinate systems that define the model stored in the DataBase.

This section is devoted to methods that allow the construction of a new Group by selecting entities associated to other entities of the finite element model. For all these methods, the association is checked by inspection of the finite element model stored in the DataBase. Therefore, these methods are defined in the specialized version of the “DataBase” class. Therefore, these methods are systematically defined in specialized versions of the “DataBase” class. For each supported solver, a description of these methods is given. (See Part III.)

As explained in the introduction of this Chapter, the DataBase can be used to store Results. Internally, a mapping between keys and Results allows to associate Result objects to an identifier. Each key1 is characterized by three String objects corresponding respectively to the load case name, to the subcase name and to the Result type name.

The class “Result” is described in Chapter I.4. In this section, one describes the methods of the generic “DataBase” class that deal with “Result” objects. The “Result” methods are:

“getResultLoadCaseNames” is a method without argument that returns an Array containing the names of all the load cases for which Results are stored in the DataBase.

“getResultSubCaseNames” is a method without argument that returns an Array containing the names of all the subcases for which Results are stored in the DataBase.

“getResultTypeNames” is a method without argument that returns an Array containing all the Result identifiers for Results stored in the DataBase.

“checkResultExists” is used to check whether a certain Result is present in the DataBase. The method returns a Boolean value and has three String arguments corresponding to the load case name, the subcase name, and the Result name.

“getResultSize” returns the size of a Result object stored in the DataBase. The method returns an integer value and has three String arguments corresponding to the load case name, the subcase name, and the Result name. If the corresponding Result is not found, “-1” is returned.

“getResultLcInfos” returns the integer and real ids associated to a Result object stored in the DataBase. The method has three String arguments corresponding to the load case name, the subcase name, and the Result name. Integer and real ids are returned in an Array of 4 elements. If specified Result is not found in the DataBase, method returns nil.

“addResult” is used to add a Result to the DataBase. The arguments are three String objects corresponding to the key (load case name, subcase name and Result type name), and the Result object. If a Result with the same key is already present in the DataBase, it is deleted and replaced by the new one.

“generateCoordResults” has three String arguments and builds a Result corresponding to Coordinates of elements and nodes. The arguments correspond to the key to which the Result object is associated in the DataBase. (load case name, subcase name, and Result name respectively.) If the String arguments are omitted, one assumes “”, “” and “Coordinates” for the Result key.

“generateElemAxesResults” has two or three arguments. The two first arguments are the load case name and subcase name to which the produced Results will be associated. These arguments are mandatory. The method produces several vectorial Results:

“Axis 1” corresponding to the first element axis.

“Axis 2” corresponding to the second element axis.

“Axis 3” corresponding to the third element axis.

“Normals” corresponding to the normals to 2D elements.

“Axis” corresponding to the first element axis of 1D elements.

“Coordinates” corresponding to the coordinate Results generated by “generateCoordResults” method.

By default, components are expressed in element axes, except for the “Coordinates” Result which follow the conventions of “generateCoordResults” method. The third argument allows to modify this default behavior by expressing the components in another coordinate system specified by a String or an integer argument. This argument is optional. The use of this option leads to a very significant increase of the computation time of the method.

Note also that the vectorial values are associated to element centers, and element corners. For the “Coordinates” Result, values are also associated to nodes.

“buildLoadCasesCombili” allows the definition of Result by linear combination of other Results. The selection of the new Result and elementary Results is done by load case names. The first argument is a String containing the new load case name of the Results being created. The second argument contains an Array of real numbers corresponding to the factors of the linear combination. The third argument is an Array of Strings containing the name of load cases to which elementary Results are associated. The lengths of the two Array arguments must match.

“renameResults” is used to modify the key by which Results stored in the DataBase can be accessed. The method has two arguments:

An Array of three String objects corresponding to the identification of the Results that must be renamed. If one of the String is void or replaced by nil, then all the Results matching the non void Strings are renamed. Of course, at least one of the three Strings must be non-void.

An Array of three Strings containing the new key identifiers. Strings must be void or nil at the same time as the Strings of “From” argument.

“copyResults” has the same arguments as “renameResults”, and performs nearly the same operation. The difference is that the Result stored in the DataBase is now duplicated, and not simply renamed.

“removeResults” is used to delete Results from the DataBase. This method has two String arguments corresponding to the method of selection of Results and to an identifier respectively. The “Method” argument has three possible values: “CaseId”, “SubCaseId” or “ResId”. It specifies whether the Results to be deleted are identified by their load case name, their subcase name or their Result type name. The second String argument corresponds to the identifier of Results to be deleted.

“removeAllResults” erases all Results stored in the DataBase. This method has no argument.

“getResultCopy” returns a Result object containing a copy of a portion of a Result stored in the DataBase. This method has generally six arguments (see below for other possibilities):

A String argument corresponding to the load case name.

A String argument corresponding to the subcase name.

A String argument corresponding to the Result type name.

A String argument corresponding to the method of selection. Possible values of this argument are listed and explained in Table I.4.6 of section I.4.3. This “Method” string argument can be replaced by a Result or ResKeyList object. Then it correspond to the target entities on which the values are extracted. (See remark below.)

A Group argument corresponding to the Target (selection of elements or nodes on which the Results are recovered).

An Array corresponding to the list of layers on which results are to be recovered. This Array can be void. If not void, its elements must be String or integer objects.

Some of the arguments given above are optional. For example, the function can be called with 3 or 4 arguments only.

When the fourth argument is a Result or a ResKeyList object, the function must have exactly four arguments. Then, it returns a new Result obtained by extracting the pairs of key and values on the keys of the Result or ResKeyList argument.

Valid calls to the function are illustrated below:

res=db.getResultCopy(lcName,scName,resName,"ElemCenters",

targetGrp,layersList)

res=db.getResultCopy(lcName,scName,resName,"NodesOnly")

res=db.getResultCopy(lcName,scName,resName,layersList)

res=db.getResultCopy(lcName,scName,resName)

res=db.getResultCopy(LcName,ScName,ResName,targetRes)

res=db.getResultCopy(LcName,ScName,ResName,targetRkl)

res=db.getResultCopy(lcName,scName,resName,"ElemCenters",

targetGrp)

In the third example above, the extraction is done on the list of layers only, no selection is done for the elements or nodes. In the fourth example, a copy of the Result stored in the DataBase is returned without selection on a list of elements, nodes, layers or sub-layers..

When the method has four arguments, the fourth one is interpreted as a selection method if it is a String. As no extraction Group argument is provided, the extraction is done on all nodes or elements. Also, when four arguments are provided, a single layer cannot be specified as a String argument. It shoud be specified as an Array of Strings. For example:

res=db.getResultCopy(lcName,scName,resName,["Z1"])

is valid, but:

res=db.getResultCopy(lcName,scName,resName,"Z1")

is not.

Methods devoted to the importation of Results from binary Results files are specific to the peculiar solver that produced the Results. These methods are described in Part III.

Four singleton methods allow to enable or disable partially or totally the reading of composite layered Results from finite element result files. These methods influence the behavior of methods defined in the “specialized” versions of the “DataBase” class. (See Part III.) The four methods are:

“enableLayeredResultsReading” enables the reading of layered laminate Results (stresses, strains, failure indices...). This method has no argument. Actually, this method is used to re-enable the reading of layered laminate Results as this reading is enabled by default.

“disableLayeredResultsReading” disables the reading of layered laminate Results (inverse of the previous method). Again, the method has no argument.

“enableSubLayersReading” enables some sub-layers for the reading of composite layered Results from result files. The method has one argument: a String or an Array of Strings chosen among “Bottom”, “Mid” and “Top”. Here again, as by default all the sub-layers are enabled, the method is rather a “re-enabling” method.

“disableSubLayersReading” disables some sub-layers for the reading of composite layered Results from result files. The method has one argument: a String or an Array of Strings chosen among “Bottom”, “Mid” and “Top”.

By default the reading of layered composite Results is enabled for all sub-layers. The disabling may help to reduce the size of Results stored in specialized DataBases. Actually, the reading of composite results is no longer mandatory as most composite results can be produced with the appropriate methods of the “CLA” classes (Part II).

When a Samcef banque is read into a DataBase, the abbreviations defined in the Samcef model are read as well and stored into the Samcef DataBase in a mapping of string objects. Five methods allow the manipulation of abbreviations stored in the DataBase:

“clearAbbreviations” has no argument and clears all the abbreviations stored into the DataBase.

“addAbbreviation” adds one abbreviation to the DataBase. The method has two String arguments: the key and the value.

“addAbbreviations” adds a list of abbreviations to the DataBase. The method has one argument: A Hash object containing the correspondence between keys and values. Each pair is of course a pair of String objects.

“NbrAbbreviations” attribute has no argument and returns the number of abbreviations stored in the DataBase.

“getAbbreviation” returns the String value of one abbreviation. The method has one String argument: the key of the abbreviation.

“checkAbbreviationExists” returns “true” if the abbreviation exists. The method has one String argument: the key of the abbreviation.

“getAbbreviations” returns a Hash object containing all the abbreviations stored in the DataBase. This method has no argument.

Note that, even though no abbreviation is defined in other solver models, the abbreviation methods defined in DataBase class can also be used when one works with all models. This is why the methods listed above are defined in generic “DataBase” class and not in “SamcefDb” class described in Chapter III.2.

The DataBase Class provides one method that returns a ClaDb object corresponding to the materials, plies and Laminates stored in the DataBase. This method is called “getClaDb” and has no argument. The units associated to this ClaDb object are the default units as defined in Table II.1.4. If the finite element model is defined in another unit system, it is the responsibility of the user to define correctly the units of the ClaDb database and of all its entities using the method “setUnitsAllEntities” of ClaDb class. (See section II.2.4.)

Another method corresponding to the calculation of Results related to laminate load response has been added. This method called “calcFiniteElementResponse” has the same arguments as the corresponding method defined in the “ClaLam” class. The method and the meaning of its arguments are described in section II.4.8. The method defined in “DataBase” class differs from the one defined in “ClaLam” class by the fact that the algorithm tries to retrieve the Laminate corresponding to the element to which Result values are attached. The information is found in the DataBase object. More precisely, The algorithm performs as follows:

The property ID or laminate ID corresponding to the element is identified. Then, the algorithm tries to retrieve a laminate with the same ID from the ClaDb argument.

If a laminate object has been identified and extracted, the algorithm performs the same operations has for the method described in section II.4.8.

Otherwise, no Result values are produced for the current key and one tries the next one.

Similarly, one defined the method “calcFiniteElementCriteria” which has exactly the same arguments and outputs as the corresponding method of “ClaLam” class described in section II.4.8. The difference between the two methods resides in the fact that the method in DataBase class retrieves the ClaLam object corresponding to the element to which the tensorial values are attached.

Finally, a third method allows to retrieve laminate engineering properties in the format of Result objects. The method “calcFemLamProperties” has three arguments:

A ClaDb object which is used for the calculations of the different laminate properties.

A ResKeyList object that corresponds to the finite element entities for which values shall be inserted in Result object. Note that the produced Result object are non layered. (Only the ElemId and NodeId of the ResKeyList keys matter.)

A Hash with String keys and values corresponding to the requests. The key corresponds to the name by which the returned Result shall be referred. The value corresponds to the laminate engineering property that is requested. Presently, possible values of this parameter are: “E_f_xx”, “E_f_yy”, “E_k0_xx”, “E_k0_yy”, “E_xx”, “E_yy”, “G_f_xy”, “G_k0_xy”, “G_xy”, “nu_f_xy”, “nu_f_yx”, “nu_k0_xy”, “nu_k0_yx”, “nu_xy”, “nu_yx”, “thickness”, “surfacicMass”, “averageDensity”.

The method returns a Hash with String keys and Result values.

An example of use of this method follows:

...

compDb=db.getClaDb

res=db.getResultCopy("COORD","coord","coordinates")

rkl=res.extractRkl

requests={}

requests["res1"]="thickness"

requests["res2"]="E_xx"

requests["res3"]="E_yy"

resList=db.calcFemLamProperties(compDb,rkl,requests)

resList.each do |id,res|

Util.printRes(STDOUT,id,res)

end

...

For the different “finite element” methods listed above, the units considered for the returned “Result” objects are the units of the “ClaDb” object argument. This characteristic differs from the behavior of the corresponding methods in “ClaLam” class.

One describes here the iterators of the generic DataBase class only. The iterators of the specialized versions of the class are described in Part III.

“each_abbreviation” loops on the abbreviations stored in the DataBase. It produces pairs of Strings corresponding to the name of the abbreviation, and to the corresponding value respectively. Again, one insists on the fact that this iterator is defined in generic DataBase class.

“each_groupName” loops on the Groups stored in the DataBase and produces String elements containing Group names.

The iterator “each_resultKey” produces Arrays containing the keys to which stored Results are associated. This iterator can be used in two different ways. Either:

db.each_resultKey do |lcName,scName,tpName|

...

end

or:

db.each_resultKey do |resKey|

...

end

In the second case, resKey is an Array containing three Strings.

The following methods iterate on the CaseId, SubCaseId and ResultId corresponding to the Results stored in the DataBase:

Each iterator produces String elements.

“each_resultKeyLcScId” iterator produces the pairs of load case names and subcase names for which Results are stored in the DataBase. This iterator can for example be used as follows:

db.each_resultLcScId do |lcName,scName|

...

end

However, if a single argument is passed in the block, it corresponds to an Array of two Strings.

A few more methods with general purpose are defined:

These methods are defined in the generic “DataBase” class.

The “writeGmsh” method defined in generic “DataBase” class is used to create a Gmsh result file in which parts of the model and of the Results are saved for later visualization. The purpose of the method is to allow the user to visualize parts of the model and Results. An example of use of the method is as follows:

db.writeGmsh("brol.gmsh",0,[[res,"stress","ElemCenters"],\

[displ,"displ","Nodes"]],\

[[skelGrp,"mesh slat"]],\

[[skelGrp,"skel slat"]])

The method has six parameters:

A string containing the name of the file in which the model and Results will be output.

An integer corresponding to the id of a coordinate system in which the positions are located and in which the components of Result values are expressed. The coordinate system must be defined in the dataBase db and must be a rectangular one.

An Array containing the Results to be stored in Gmsh file. Each element of the Array is An Array of three elements:

A Result object.

A String corresponding to the name with which the Result shall be referenced in Gmsh.

A String that can have five values: “ElemCenters”, “ElemCorners”, “Elements”, “Nodes”, “ElemCenterPoints” and “ElemNodePoints”. It corresponds to the location of values that are extracted from the Result object to be printed in the Gmsh file. Note:

“ElemCenterPoints” prints the values at center of elements but on a point, no matter the topology of the element. This may be handy for the visualization of Results on zero length elements.

“ElemNodePoints” prints the values at nodes of elements elements on points, no matter the topology of the element.

“Elements” output location combines the outputs at “ElemCorners” and “ElemCenters”. If no value is found at a corner, the algorithm checks whether an output is found at center of element, and uses that value if it is found.

An Array containing the Meshes to be stored in Gmsh file. Each element of the Array is An Array of two elements:

A Group object. The elements contained in the Group will be output in the Gmsh file.

A String corresponding to the name with which the mesh shall be referenced in Gmsh.

An Array containing the parts of the model for which a “skeleton” shall be saved in the Gmsh file. (A skeleton is a representation of the mesh with only a few edges.)

A Group object. The elements contained in the Group will be output in the Gmsh file.

A String corresponding to the name with which the skeleton shall be referenced in Gmsh.

A logical parameter specifying whether a binary output is requested. If the parameter is “true” a binary output is done, otherwise, the output is an ASCII one. The parameter is optional and binary output is the default. A binary output is significantly faster than an ASCII one.

Parameters 3, 4 and 5 are optional. They can be a void Array or replaced by nil argument. All the last nil parameters may be omitted. Parameter 6 is optional too. If no pair of key-values is found for a Result to be printed. Nothing is output in the gmsh file.

It is the responsibility of the user to provide Results that associate values to a single valid key. Otherwise, an error message is issued and exception is thrown. In particular, as Results written in GMSH files are not layered, the user should be careful not to output multi-layered Results. The details in error message output are controlled by the debugging verbosity level. (See I.6.6.)

Note also that if the values that the user try to output are not correct, a substitution is done: Inifinite float values are replaced by MAXFLOAT value, NaN values are replaced by MINFLOAT value. (Of course it is advised to output Result objects with valid values.)

The “writeGmshMesh” method defined in generic “DataBase” class saves a Gmsh mesh file. The method has up to four arguments (last argument is optional):

A String containing the name of the file in which the mesh is output.

An integer argument corresponding to the coordinate system in which the nodes are expressed.

A Group corresponding to the entities to be saved in the mesh file.

An optional Boolean argument specifying whether the mesh is output in binary format. The default value of the argument is “true” and corresponds to a binary output.

An example of use follows:

db.writeGmshMesh("brol.msh",0,skelGrp,false)

It may be practical to manipulate coordinate systems at post-processing level. Therefore, a “CoordSys” class devoted to the manipulation of coordinate systems is proposed. The methods defined in that class are described in sections I.2.2 and I.2.5. A list of the methods defined in “CoordSys” class is given in Table I.2.1.

| Method Name | Description | Example |

| Creation and initialization methods

| ||

| new (s) | I.2.2 | IV.2.4.5 |

| initialize | I.2.5 | |

| clone | I.2.5 | |

| Definition

| ||

| initWith3Points | I.2.2.1 | IV.2.4.5 |

| initWithOV1V2 | I.2.2.2 | |

| initWithOV2V3 | I.2.2.2 | |

| initWithOV3V1 | I.2.2.2 | |

| initWithOV2V1 | I.2.2.2 | |

| initWithOV3V2 | I.2.2.2 | |

| initWithOV1V3 | I.2.2.2 | |

| updateDefWrt0 | I.2.2.2 | |

| Modifying point coordinates

| ||

| changeCoordsA20 | I.2.3.1 | IV.2.4.5 |

| changeCoords02B | I.2.3.2 | IV.2.4.5 |

| changeCoordsA2B | I.2.3.3 | IV.2.4.5 |

Modifying vector or tensor components

| ||

| changeCompsA20 | I.2.4.1 | IV.2.4.5 |

| changeComps02B | I.2.4.2 | IV.2.4.5 |

| changeCompsA2B | I.2.4.3 | IV.2.4.5 |

| Printing

| ||

| to_s | I.2.5 | |

| Attributes

| ||

| Id | I.2.5 | |

A CoordSys object corresponds to a coordinate system. CoordSys objects are generally created in a DataBase when a model is imported.

Besides the data corresponding to the definition of the coordinate system, the CoordSys object also contains a definition of the coordinate system wrt the most basic coordinate system “0”. The corresponding member data are used by functions like the Result methods of modification of reference coordinate systems to perform the transformations of components (sections I.4.6.7 and I.4.6.8). Practically those functions work in two steps:

The components of the Result object are expressed wrt the basic coordinate system “0”.

Then, the components are expressed wrt the new coordinate system.

The definition of the corresponding member data is done by calling the method “updateDefWrt0” (section I.2.2.3).

Besides the “new” class method that returns a new coordinate system initialized to the “0” structural coordinate system, several functions can be used to modify the CoordSys objects.

“initWith3Points” method is used to define a coordinate system with the coordinates of three points A, B and C. (See the definition of “CORD2C”, “CORD2R” and “CORD2S” in [Sof04b].) This function has 5 arguments:

A string argument corresponding to the type of coordinate system being build. Three values are accepted: “CORDC”, “CORDR” and “CORDS”. (Remark that the “2” of Nastran has disappeared.)

A DataBase object that will allow the definition of coordinate system wrt the base coordinate system.

An integer argument corresponding to the reference coordinate system (coordinate system wrt which the coordinates of points A, B and C are given). A coordinate system corresponding to this integer must be defined in the DataBase passed as previous argument.

A vector containing the coordinates of point A. (Point A corresponds to the origin of the coordinate system.)

A vector containing the coordinates of point B. (Point B defines the axis Z of the coordinate system. More precisely, point B is on axis Z.)

A vector containing the coordinates of point C. (Point C defines the axis X of the coordinate system. More precisely, the axis X of the coordinate system is defined in the half-plane defined by the straight-line AB and the point C.)

The three vectors mentioned above are actually Arrays of three real values corresponding to the coordinates of points given in coordinate system identified by the integer argument.

Note that no check is made in a DataBase to ensure that the data are consistent. (For example, checking that the reference coordinate system exists.)

The three methods are “initWithOV1V2”, “initWithOV2V3”, “initWithOV3V1”, “initWithOV2V1”, “initWithOV3V2” and “initWithOV1V3”. They produce CoordSys objects defined by their origin and two direction vectors corresponding to vector and to the orientation of vector respectively.

The six arguments of these methods are:

A string argument corresponding to the type of coordinate system being build. Three values are accepted: ‘CORDC”, “CORDR” and “CORDS”.

A DataBase argument that provides the information needed to complete the definition of the coordinate system.

An integer argument corresponding to the reference coordinate system (coordinate system wrt which the origin and direction vectors are specified).

A vector containing the coordinates of the origin. This origin is specified with an Array of three real values corresponding to the components of O wrt the reference coordinate system identified with the integer argument.

A vector (Array of three real values) corresponding to the direction of the coordinate system. The components of the vector are given wrt to the reference coordinate system (estimated at point if the reference coordinate system is curvilinear).

A vector (Array of three real values) corresponding to the orientation of base vector of the coordinate system. The components of the vector are given wrt to the reference coordinate system (estimated at point if the reference coordinate system is curvilinear).

Note that the orientation vector is not necessarily orthogonal to . If the vectors are not orthogonal, then is a unit vector parallel to , and vector is the unit vector perpendicular to closest to . The last vector of the coordinate system is a unit vector perpendicular to both and .

Here again, no check is made in a DataBase to ensure that the data are consistent. (For example, checking that the reference coordinate system exists.)

“updateDefWrt0” method updates the definition of a CoordSys object wrt to “0” (most basic coordinate system). This function has one argument: the DataBase in which the information needed to build the definition wrt 0 is found.

Note that if one works with several DataBases, the responsibility of managing the correspondence of coordinate systems and DataBases lies on the user.

The “CoordSys” class defines three methods devoted to the transformation of a point coordinates from one coordinate system to another.

Method “changeCoordsA20” is used to calculate the coordinates of a point wrt basic or “0” coordinate system:

The coordinate system on which the method is called is the coordinate system in which the initial coordinates of the point are defined. (Coordinate system “A”.)

The method has one “CoordA” argument: an Array of three real values corresponding to the initial coordinates of the point in coordinate system “A”.

The method returns a “Coord0” Array of three real values corresponding to the coordinate of the same point, but expressed wrt basic coordinate system “0”.

The method is called as follows:

coords0=csA.changeCoordsA20(coordsA)

Method “changeCoords02B” is used to calculate the coordinates of a point wrt a given coordinate system “B”:

The coordinate system on which the method is called is the coordinate system in which one wants to express the point coordinates (Coordinate system “B”.)

The method has one “Coord0” argument: an Array of three real values corresponding to the initial coordinates of the point in basic coordinate system “0”.

The method returns a “CoordB” Array of three real values corresponding to the coordinate of the same point, but expressed wrt coordinate system “B”.

The method is called as follows:

coordsB=csB.changeCoords02B(coords0)

Method “changeCoordsA2B” is used to calculate the coordinates of a point wrt a given coordinate system “B”. The initial coordinate system is a given “A” coordinate system:

The coordinate system on which the method is called is the initial coordinate system in which the point coordinates are expressed (Coordinate system “A”.)

The first “CoordA” argument is an Array of three real values corresponding to the initial coordinates of the point in coordinate system “A”.

The second “CsB” argument is a “CoordSys” object wrt which one wants to calculate the point new coordinates.

The method returns a “CoordB” Array of three real values corresponding to the coordinate of the point expressed wrt coordinate system “B”.

The method is called as follows:

coordsB=csA.changeCoordsA2B(coordsA,csB)

The “CoordSys” class defines three methods devoted to the transformation of a vector or tensor components from one coordinate system to another. These methods are similar to the methods used to transform point coordinates in section I.2.3 but with the following differences:

One modifies the components of a vector or of a tensor.

A vector is defined as an Array of three real values. A tensor is defined as an Array of three Arrays of three real values.

If a vector argument is given, the method returns a vector. If a tensor argument is given, the method returns a tensor.

For each of the methods given here, the coordinates of the point at which the vector or tensor argument is defined, are also given as argument. This means that the methods have one additional argument compared to the corresponding methods of section I.2.3.3. The position of the point matters when curvilinear coordinate systems are involved in the transformation.

Method “changeCompsA20” is used to calculate the components of a vector or tensor wrt basic or “0” coordinate system:

The coordinate system on which the method is called is the coordinate system in which the initial components are defined. (Coordinate system “A”.)

The first “CoordA” argument is an Array of three real values corresponding to the coordinates of the point in coordinate system “A”.

The second “vmA” argument corresponds to the components of vector or tensor (matrix) in coordinate system “A”. (An Array of three real values, or an Array of Arrays of three real values.)

The method returns the components of a vector or tensor, but expressed wrt basic coordinate system “0”. (An Array of three real values, or an Array of Arrays of three real values.)

The method is called as follows:

vm0=csA.changeCompsA20(coordsA,vmA)

Method “changeComps02B” is used to calculate the components of a vector or tensor wrt basic or “B” coordinate system:

The coordinate system on which the method is called is the coordinate system in which one wants to express the components. (Coordinate system “B”.)

The first “Coord0” argument is an Array of three real values corresponding to the coordinates of the point in coordinate system “0”.

The second “vm0” argument corresponds to the components of vector or tensor (matrix) in coordinate system “0”. (An Array of three real values, or an Array of Arrays of three real values.)

The method returns the components of a vector or tensor, but expressed wrt basic coordinate system “B”. (An Array of three real values, or an Array of Arrays of three real values.)

The method is called as follows:

vmB=csB.changeCoords02B(coords0,vm0)

Method “changeCompsA2B” is used to calculate the components of a vector or tensor wrt basic or “B” coordinate system:

The coordinate system on which the method is called is the coordinate system in which the initial components are defined. (Coordinate system “A”.)

The first “CoordA” argument is an Array of three real values corresponding to the coordinates of the point in coordinate system “A”.

The second “vmA” argument corresponds to the components of vector or tensor (matrix) in coordinate system “A”. (An Array of three real values, or an Array of Arrays of three real values.)

The third “CsB” argument is a “CoordSys” object wrt which one wants to calculate the new components.

The method returns the components of a vector or tensor, but expressed wrt basic coordinate system “B”. (An Array of three real values, or an Array of Arrays of three real values.)

The method is called as follows:

vmB=csA.changeCoordsA2B(coordsA,vmA,csB)

One gives here a list of functions that do not fit in any category listed above.

Method “initialize” initializes or clears a CoordSys object. After initializing, the definition corresponds to the “0” structural coordinate system.

“clone” method returns a Copy of the CoordSys object to which it is applied.

“to_s” method is used for printing the Result object.

“Id” integer attribute corresponds to the integer identifier of the CoordSys object. One defines a “setter” and “getter” attribute (“Id=” and “Id” methods respectively).

The “Group” corresponds to the Patran notion of group. Group objects can be stored in a DataBase object, retrieved from it and manipulated outside the DataBase. One describes here the manipulation methods outside the DataBase class.

A list of the methods defined in “Group” class is given in Table I.3.1.

| Method Name | Description | Example |

| Creation and initialization methods

| ||

| new (s) | I.3.2 | IV.2.5.1 |

| initialize | I.3.7 | |

| clone | I.3.7 | |

| Identification (attributes)

| ||

| Name= | I.3.7 | IV.2.2.3 |

| Name | I.3.7 | |

| Manipulation of entities

| ||

| getEntitiesByType | I.3.3 | IV.2.2.2 |

| getNbrEntities | I.3.3 | |

| getNbrEntitiesByType | I.3.3 | IV.2.2.2 |

| setEntities | I.3.3 | IV.2.5.1 |

| setEntitiesByType | I.3.3 | |

| addEntities | I.3.3 | |

| addEntitiesByType | I.3.3 | |

| removeEntities | I.3.3 | |

| removeEntitiesByType | I.3.3 | |

| clearAllEntitiesByType | I.3.3 | |

| matchWithDbEntities | I.3.3 | IV.2.5.1 |

| importEntitiesByType | I.3.3 | |

| containsEntity | I.3.3 | |

| Operators

| ||

| + | I.3.4 | IV.2.5.1 |

| - | I.3.4 | |

| * | I.3.4 | IV.2.2.4 |

| / | I.3.4 | |

| BLOBs for SQL

| ||

| toBlob | I.3.5 | |

| fromBlob | I.3.5 | |

| Iterators

| ||

| each_element | I.3.6 | |

| each_rbe | I.3.6 | |

| each_node | I.3.6 | IV.2.2.2 |

| each_coordsys | I.3.6 | |

| Other methods

| ||

| to_s | I.3.7 | |

| Attribute readers

| ||

| NbrElements | I.3.7 | |

| NbrNodes | I.3.7 | |

| NbrRbes | I.3.7 | |

| NbrCoordsys | I.3.7 | |

A Group is characterized by its name (a String object) and the entities it contains. Four type of entities can be contained in a FeResPost Group: coordinate systems, nodes, elements and rigid body elements (RBEs). At C++ level, for each type of entity, the class group manages a set of integers corresponding to the identifiers of the entities.

Part of the operations dealing with Groups are done by methods defined in DataBase class. The methods of DataBase specially devoted to operations mainly related to Groups are described in section I.1.2.

The singleton method “new” is used to create Group objects.

The class “Group” provides a large choice of methods devoted to the manipulation of the list of entities in its storage. One makes the distinction between operations that modify the content of a Group, and the operations that allow the inspection of this content.

The modification of the Group’s content can be done by calls to the following methods:

“setEntities” takes a String argument that contains the definition of Group entities in a format similar to the format of the Patran Group session file (section I.1.2.1). All entities previously stored in the Group are erased before the conversion of the String argument. The function returns “self” (the modified Group object). As the String argument follows Patran conventions, the list of RBEs follows “MPC” keyword.

“setEntitiesByType” has two arguments. The first argument, “TypeName” is a String object that specifies the type of entities to be added to the Group. This first argument may have four different values: “Node”, “Element”, “MPC” (for rigid body elements) or “CoordSys”. Any other argument is invalid. The second argument may have two different types:

The second argument may be a String object containing the definition of a group in a format similar to the format of the Patran Group session file (section I.1.2.1). However, in this case, only the entities of the type selected by the “TypeName” argument are stored in the Group.

The second argument may also be an Array of integers. Then the integers correspond to the entities added to the Group. The type of entities is specified by the “TypeName” argument.

As for the “setEntities” method, all entities previously stored in the Group are erased before inserting the new entities.

“addEntities” is identical to “setEntities” but the entities stored in the Group are not erased before adding the new entities. As the String argument follows Patran conventions, the list of RBEs follows “MPC” keyword.

“addEntitiesByType” is identical to “setEntitiesByType” but the entities stored in the Group are not erased before adding the new entities.

“removeEntities” is identical to “addEntities” but the selected entities are removed from the Group instead of being added to it. As the String argument follows Patran conventions, the list of RBEs follows “MPC” keyword.

“removeEntitiesByType” is identical to “addEntitiesByType” but the selected entities are removed from the Group instead of being added to it.

“clearAllEntitiesByType” has one String argument corresponding to the type of entities to be cleared from the Group. Possible arguments are “Node”, “Element”, “MPC” (for rigid body elements) or “CoordSys”.

“importEntitiesByType” is used to insert entities in a Group by importing them from another Group, or from the entities stored in a DataBase model. The method has two or four arguments:

The first argument is a String corresponding to the type of entities to be inserted. Possible arguments are “Node”, “Element”, “MPC” (for rigid body elements) or “CoordSys”.

The Second argument is either a Group, or a DataBase.

the third and four arguments are integers corresponding to the range of insertion (idMin and idMax). If omitted, then all entities of the corresponding type are inserted.

“matchWithDbEntities” is used to ensure that a Group contains only entities that are defined in a DataBase. The function has one DataBase argument. After calling this method, the entities that do not exist in the DataBase have been cancelled from the Group too.

Presently, four methods devoted to the manipulation of entities and not modifying the Group have been defined:

“getEntitiesByType” receives one String argument corresponding to the type of entity and returns an Array of integers containing the corresponding entity indices. The four possible values of the argument are “Node”, “Element”, “MPC” (for rigid body elements) and “CoordSys”.

“getNbrEntitiesByType” receives one String argument corresponding to the type of entity and returns an integer corresponding to the number of entities of the specified type contained in the Group. The four possible values of the argument are “Node”, “Element”, “MPC” (for rigid body elements) and “CoordSys”.

“getNbrEntities” has no argument and returns an Array of four integer corresponding to the number of entities of each of the following types, and in the same order as: “CoordSys”, “Element”, “Node” and “MPC” (for rigid body elements).

“containsEntity” allows to check whether a given entity belongs to the Group. The method has two arguments:

The first argument is a String corresponding to the type of entities to be inserted. Possible arguments are “Node”, “Element”, “MPC” (for rigid body elements) or “CoordSys”.

The second integer argument is the entityId.

The function returns a bool value.

A FeResPost Group cannot contain Nastran MPCs.

Eight such operators have been defined. One first explains the meaning and behavior of the four elementary dyadic operations.

“/” operator: if and are two Groups, then . (The operation is equivalent to a logical “exclusive or” operation on the entities.)

Group objects can be saved in SQL database as “BLOB” objects.

Two methods are defined in Group class to convert object to and from Blobs:

“toBlob” has no argument and returns the BLOB in a String object.

“fromBlob” has one String argument corresponding to the BLOB, and initializes the Group according to Blob content.

The class “Group” provides four iterators:

These iterators iterate on the corresponding entities stored in the Group object. They produce Integer values that are passed to the block.

One gives here a list of methods that do not fit in any category listed above:

Method “clone’ returns a Copy of the Group object to which it is applied.

Attribute “Name” returns a String containing the name of the Group.

Attribute “Name=” has one String argument and sets the name of the Group.

Attribute “NbrElements” returns an integer containing the number of elements stored in the Group.

Attribute “NbrNodes” returns an integer containing the number of nodes stored in the Group.

Attribute “NbrRbes” returns an integer containing the number of RBEs stored in the Group.

Attribute “NbrCoordsys” returns an integer containing the number of coordinate systems stored in the Group.

The “Name” and “Name=” methods correspond to the “Name” attribute.

The “Result” class is devoted to the manipulation of finite element Results. Examples of Results are stress tensor on volumic or surfacic elements, displacements, grid point forces,... The ruby class “Result” is a wrapping around the C++ class “Result”.

Results can be read from various solver binary files. See Part III for more information.

The “Result” class allows the storage and manipulation of Real as well as Complex values. Note however that several of the methods of Result class do not allow the manipulation of Complex Results. Therefore, indications are inserted here and there in this Chapter to provide information about the “Complex capabilities” of the different methods.

An important comment must be done: Even though the results can be imported into a DataBase, this does not mean that the manipulation of the results is correct. Indeed, all manipulation that involve transformation of coordinate systems can be incorrect because geometric non-linearities are not taken into account. Methods that can be affected by this limitation are for example: “modifyRefCoordSys”, “modifyPositionRefCoordSys” and “calcResultingFM”.

A list of the methods defined in “Result” class is given in Table I.4.1.

Basically, a Result may be considered as a mapping between “keys” and “values”. These two concepts are discussed in sections I.4.1.1 and I.4.1.2 respectively.

Otherwise several member data of Result objects can be accessed at ruby level. This is the case for Result name, integer and real identifiers. Those are discussed in section I.4.1.3.

The “keys” of Results correspond to the entities to which “values” are associated. For example, a key may be:

The index of an element.

The index of a node.

A pair of integers corresponding to the indices of an element and of a node (for Results given at corners of elements).

A pair of integers corresponding to the indices of an element and of a layer (for example, for layered Results corresponding to laminated properties).

...

So, at C++ level, each key is characterized by four integers:

A 32bits integer corresponding to the element index,

A 32bits integer corresponding to the node index,

A 32bits integer corresponding to the layer index,

An 8bits char corresponding to the sub-layer index (rarely used).

At ruby level, one can work with either the C++ integer ids, or their string correspondent. The correspondence between string and integers are given in Tables I.4.2, I.4.3, I.4.4 and I.4.5. The data given in these Tables can be completed by additional data peculiar to the different supported solvers. (See Part III for more information.)

In Table I.4.4, the last layers IDs cannot be attributed to Result keys. The elements corresponds to groups of layers and are used to perform extraction operations:

“Beam Points” is used to extract on layers “Point A”, “Point B”,...

“Shell Layers” is used to extract on layers “NONE”, “Z1” and “Z2”.

“All Plies ” is used to extract on all layers with positive Ids (i.e. laminate plies).

“All Layers ” extracts on all layers.

| No element association | |

| "NONE" | -1 |

For Results associated to elements | |

| "elem 1" | 1 |

| "elem 2" | 2 |

| "elem 3" | 3 |

| "elem ..." | ... |

| No node association | |

| "NONE" | -999 |

For Results associated to nodes | |

| "node 1" | 1 |

| "node 2" | 2 |

| "node 3" | 3 |

| "node ..." | ... |

| For unlayered Results

| |

| NONE | -999 |

| Undefined layer

| |

| UNDEF | -300 |

| For stress recovery in bars and beams

| |

| "Point A" | -201 |

| "Point B" | -202 |

| "Point C" | -203 |

| "Point D" | -204 |

| "Point E" | -205 |

| "Point F" | -206 |

| For 2D elements | |

| "Z0"1 | -100 |

| "Z1" | -101 |

| "Z2" | -102 |

| For Results in laminates (positive layers)

| |

| "layer 1" | 1 |

| "layer 2" | 2 |

| "layer 3" | 3 |

| "layer ..." | ... |

| Group of layers for extraction operations

| |

| "Beam Points" | -2001 |

| "Shell Layers" | -2002 |

| "All Plies" | -2003 |

| "All Layers" | -2004 |

| "NONE" | 0 |

| "All Sub-Layers" | 50 |

| "Bottom" | 101 |

| "Mid" | 102 |

| "Top" | 103 |

Note that the notion of “key” is also closely related to the “ResKeyList” ruby class which is simply a list of key objects (see Chapter I.5).

The values of Result are characterized by an integer value (32 bits integer) and one or several real values. The integer value corresponds to the coordinate system into which the components are expressed:

-9999 means that the results are not attached to a coordinate system. Their value corresponds to String “NONE”.

-2000 means that the values are expressed in a user defined coordinate system. This means a coordinate system which is not identified by an integer to be later retrieved from a DataBase. The corresponding String is “userCS”.

-1000 means that the values are expressed in a coordinate system projected on surfacic elements. This means also that the values are no longer attached to a peculiar coordinate system defined in a DataBase. The corresponding String is “projCS”.

-6 means the laminate coordinate system. The corresponding String is “lamCS”.

-5 means the patran element IJK coordinate system which correspond to the element coordinate system for most finite element software. The corresponding String is “elemIJK”.

-4 means the ply coordinate system when the element has laminated properties. The corresponding String is “plyCS”.

-3 means the material coordinate system. The corresponding String is “matCS”.

-2 means the nodal analysis coordinate system. Values must then be attached to a node (nodeId of key). The corresponding String is “nodeCS”.

-1 means the element coordinate system. The corresponding String is “elemCS”.

Any integer greater than or equal to zero: a coordinate system defined in a DataBase object. “0” denotes the base Cartesian coordinate system.

Obviously, for several types of coordinate system, the values must be attached to an element to make sense. This is the case for “elemIJK”, “plyCS”, “matCS”, “elemCS”,...

The real values correspond to the components:

A “vectorial” Result (res.TensorOrder=1) has three components named “X”, “Y” and “Z” respectively.

A “tensorial” Result (res.TensorOrder=2) has normally nine components. However, as all the tensors with which one deals are symmetric, only six components are stored: “XX”, “YY”, “ZZ”, “XY”, “YZ”, “ZX”.

A “FieldCS” Result (res.TensorOrder=-10) has nine components. The Result components corresponds to the components of the three direction vectors associated to each key and are given in the followinf order: “1X”, “1Y”, “1Z”, “2X”, “2Y”, “2Z”, “3X”, “3Y”, “3Z” if the three direction vectors are V1, V2 and V3. (See section I.4.1.5.)

Note that the name of the components given above matter, as they may be used to extract a single component out of a vectorial or tensorial Result. For Complex Result, the numbers of components mentioned above is multiplied by two. They are presented in the following order:

First all the Real or Magnitude components are presented.

Then all the Imaginary or Angular components follow. The angular components are expressed in (Nastran convention).

The components are stored in single precision real values (float coded on 32 bits). This means that there is no advantage at using double precision real values in your programming as far as the manipulation of results is concerned.

Besides the mapping from key to values, the Result objects contain information that allow their identification. The identification information is:

The name of the object (a String). This name can be set or retrieved with methods “Name=” and “Name”. These methods correspond to “Name” attribute.

Two integer identifiers that may contain information like the load case id, the mode number,... These member data can set or retrieved with methods “setIntId” and “getIntId”.

Two real identifiers that may contain information related to the time for a transient calculation, to continuation parameters, eigen-values,... These member data can set or retrieved with methods “setRealId” and “getRealId”.

The methods used to access these member data are described in section I.4.6.1.

A Result object is also characterized by two other integer values:

The tensorial order of the values it stores. This integer may be 0, 1 or 2 corresponding to scalar, vectorial or (order 2) tensorial values. (A Tensorial order of -10 corresponds to the special kind of FieldCS Result. See section I.4.1.5.)

The format of the result. This value may be 1 (Real values), 2 (Complex Result in rectangular format) or 3 (Complex Result in polar format).

Methods used to manipulate these data are described in section I.4.6.1. These two integers are attributes of the class.

“FieldCS” Results is a special kind of Result corresponding to the concept of coordinate system defined element by element, node by node, element corner by element corner, etc. Each key of the Result is associated to a Value of nine components corresponding to the components of three local vectors associated to the key.

When defining the “FieldCS” Result one must be careful:

The TensorOrder associated to the Result object is -10!

Only “real” Format makes sense for this kind of Result.

As explained above the FieldCS Result has 9 components corresponding to the components of the three base vectors associated to each key.

The coordinate system in the values must be zero or positive. No systematic check is done when thev Result is created. (Compliance with this rule depends entirely on the disciplin of FeResPost user.)

In each value, the three vectors corresponding to the nine components must be mutually orthogonal and have unit lengths. Again, FeResPost user is responsible for the compliance with this rule. (No check is done by FeResPost.)

FieldCS Results can be used in a limited number of operations only. Actually, They are meant to be used as coordinate system in modifyRefCoordSys method (section I.4.6.7). Note that the components of a FieldCS Result can also be modified by modifyRefCoordSys method but the new CS ID should be >=0.

Actually, as the function mainly meant to be used in method, better efficiency is generally obtained when values components are expressed wrt CS 0.